Getting Started with Multi-LLM Gateway

What is the Multi-LLM Gateway?

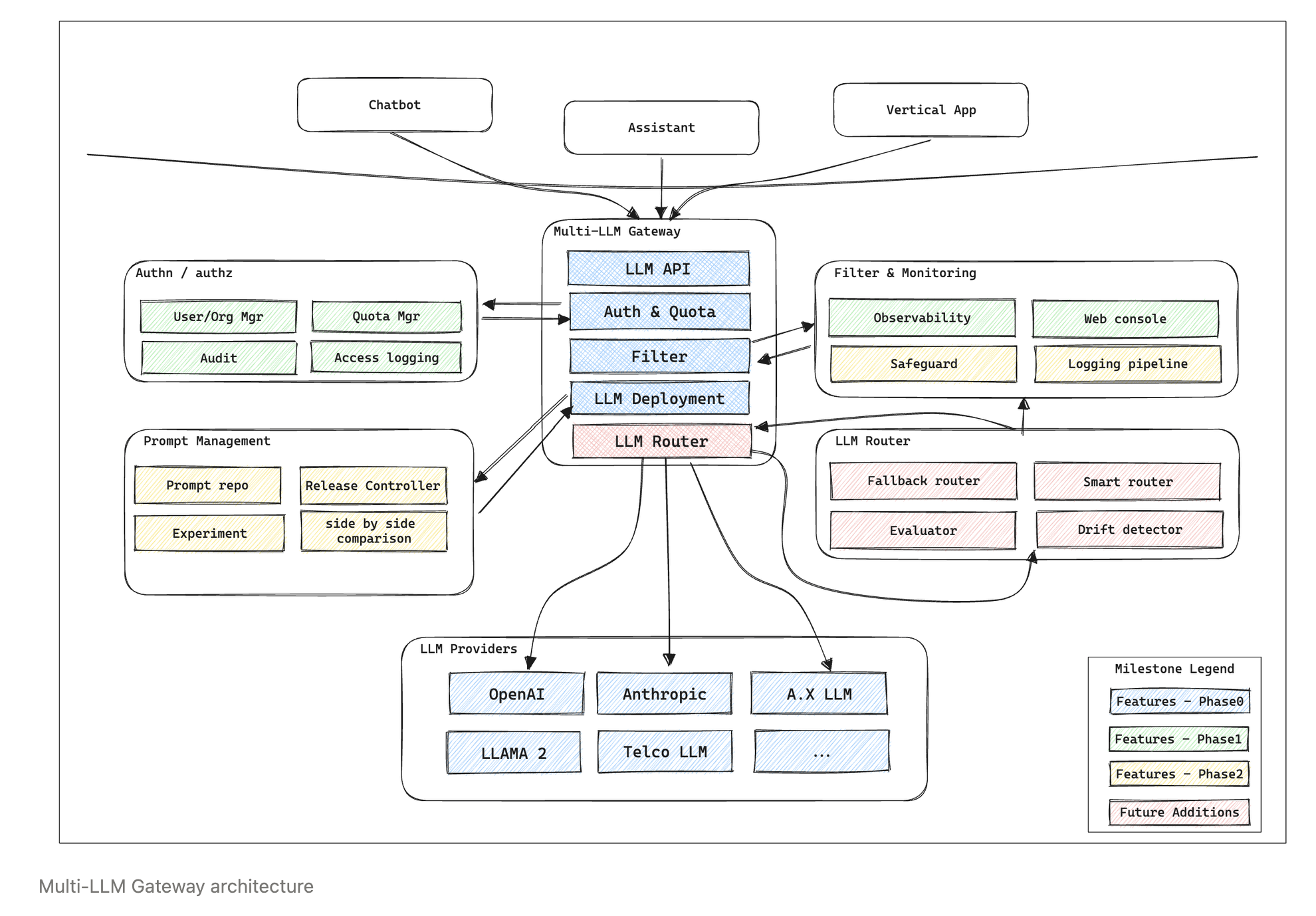

The Multi-LLM Gateway is a unified interface for working with Large Language Models (LLMs) from multiple providers. Its primary goal is to absorb the differences in interfaces and feature sets across LLM providers, so that users do not have to handle each provider separately.

Motivation

Our starting point is a simple belief: no single LLM can adequately address every problem. Using multiple LLMs is therefore not only beneficial but necessary, and that makes an integrated interface essential for a seamless user experience.

As the LLM landscape expands, more and more companies develop and offer LLM services, and with that variety comes complexity. The interfaces of different LLM providers can vary dramatically: even when two requests carry the same essential meaning, the field names differ, and so do the features each model supports. For users, this means building and maintaining client-side code tailored to each LLM provider. Most users need only a prompt and a few sampling options, yet the differences between interfaces force them to make repeated adjustments.

The Multi-LLM Gateway addresses this by unifying these interfaces and lowering development costs for users. It absorbs the cost of adapting to the constantly evolving interfaces of different providers, which saves users both money and effort. Because every request uses the same format, users can experiment with and adopt models from many providers without changing their core code. Switching models is as simple as changing the model name, which makes it easy to test and verify candidates and then choose the best model for a given service.
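To make the idea concrete, here is a minimal Python sketch of how a gateway might translate one request format into provider-specific payloads. Everything in it is hypothetical: the providers (`provider_a`, `provider_b`), their field names, the model names, and the `UnifiedRequest` and `build_payload` names are illustrative assumptions, not the gateway's actual API or any real provider's schema. The point it shows is that the caller supplies only a model name, a prompt, and sampling options, while the per-provider differences live in one place.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional

# Hypothetical registry: which (hypothetical) provider serves each model name.
MODEL_REGISTRY: Dict[str, str] = {
    "model-a-large": "provider_a",
    "model-b-chat": "provider_b",
}

# Hypothetical per-provider field names for the same sampling options.
FIELD_MAPS: Dict[str, Dict[str, str]] = {
    "provider_a": {"max_tokens": "max_tokens", "temperature": "temperature", "top_p": "top_p"},
    "provider_b": {"max_tokens": "maxOutputTokens", "temperature": "temp", "top_p": "nucleus_p"},
}


@dataclass
class UnifiedRequest:
    """One request shape for every provider: model name, prompt, sampling options."""
    model: str
    prompt: str
    max_tokens: int = 256
    temperature: Optional[float] = None
    top_p: Optional[float] = None


def build_payload(req: UnifiedRequest) -> Dict[str, Any]:
    """Translate a UnifiedRequest into the payload its (hypothetical) provider expects."""
    provider = MODEL_REGISTRY.get(req.model)
    if provider is None:
        raise ValueError(f"unknown model: {req.model}")
    fields = FIELD_MAPS[provider]

    # Where the prompt goes also differs per provider (illustrative only).
    payload: Dict[str, Any] = {"model": req.model}
    if provider == "provider_a":
        payload["messages"] = [{"role": "user", "content": req.prompt}]
    else:
        payload["input"] = req.prompt

    # Copy only the options the caller set, renamed to the provider's field names.
    for name in ("max_tokens", "temperature", "top_p"):
        value = getattr(req, name)
        if value is not None:
            payload[fields[name]] = value
    return payload


if __name__ == "__main__":
    # Switching providers only requires changing the model name.
    for model in ("model-a-large", "model-b-chat"):
        print(build_payload(UnifiedRequest(model=model, prompt="Summarize this text.", temperature=0.2)))
```

Keeping the field mappings in data rather than in per-provider branches means that, in this sketch, supporting a renamed field is a one-line change that callers never see.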

With a unified interface, users can integrate, access, and compare multiple LLMs side by side. This flexibility is especially useful when deploying several LLMs in production or when comparing the outputs of different models. A single, coherent management layer also enables standardized configuration, consistent management procedures, and timely updates across all connected LLM services.

The Multi-LLM Gateway also caches responses, so repeated queries return quickly and consume fewer resources. In addition, built-in safety features help protect against adversarial prompting. Together, these capabilities make the gateway more powerful and flexible and allow it to serve a wide range of user needs.
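Response caching can be sketched as a small in-memory cache keyed on a canonical form of the request, so that an identical repeated query is answered without calling the provider again. This is a minimal sketch under stated assumptions: `ResponseCache`, `cache_key`, and the `call_model` callable are hypothetical names, the cache is an in-process LRU with no expiry, and the gateway's real caching strategy (storage, eviction, time-to-live, which requests are cacheable) is not described in this document.

```python
import hashlib
import json
from collections import OrderedDict
from typing import Any, Callable, Dict


def cache_key(request: Dict[str, Any]) -> str:
    """Hash a canonical JSON encoding so equal requests share a key regardless of key order."""
    canonical = json.dumps(request, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class ResponseCache:
    """A hypothetical in-memory LRU cache placed in front of a model call."""

    def __init__(self, call_model: Callable[[Dict[str, Any]], str], max_entries: int = 1024) -> None:
        self._call_model = call_model  # function that actually queries the provider
        self._max_entries = max_entries
        self._entries: "OrderedDict[str, str]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get_response(self, request: Dict[str, Any]) -> str:
        key = cache_key(request)
        if key in self._entries:
            # Cache hit: mark as most recently used and skip the provider call.
            self._entries.move_to_end(key)
            self.hits += 1
            return self._entries[key]

        # Cache miss: query the provider and store the result.
        self.misses += 1
        response = self._call_model(request)
        self._entries[key] = response
        if len(self._entries) > self._max_entries:
            # Evict the least recently used entry.
            self._entries.popitem(last=False)
        return response


if __name__ == "__main__":
    calls = []

    def fake_model(request: Dict[str, Any]) -> str:
        # Stand-in for a real provider call; records how often it is invoked.
        calls.append(request)
        return f"echo: {request['prompt']}"

    cache = ResponseCache(fake_model, max_entries=2)
    cache.get_response({"model": "m", "prompt": "hello", "temperature": 0.0})
    # Same request with a different key order: served from the cache.
    cache.get_response({"temperature": 0.0, "prompt": "hello", "model": "m"})
    print(cache.hits, cache.misses, len(calls))  # prints: 1 1 1
```

One design choice worth flagging: because sampled outputs can differ from call to call, a real gateway might restrict caching to deterministic settings or make it opt-in, whereas this sketch caches every request.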
